DATA 5207

Lecture 2

Presenting data to non-experts (visualization)

The audience has less technical knowledge

Make the data engaging

Convey the pattern

Data Graphics

Three considerations:

What information do you want to communicate?

Who is the target audience?

Why is this design feature relevant?

Lecture 3

Confounding factors: e.g. earnings appear to vary with height, but this may be driven by gender, which is related to both height and earnings
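To make the confounding idea concrete, here is a small simulation. It is written in Python although the course itself uses R, and every number in it is invented for illustration, not real earnings data. In this hypothetical setup, gender shifts both height and earnings, and height has no direct effect on earnings. The pooled slope of earnings on height still comes out clearly positive. Within each gender group, the slope is close to zero.

```python
import random

def ols_slope(xs, ys):
    """Slope of a simple least-squares regression of ys on xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def simulate(n=2000, seed=1):
    """Hypothetical data: gender drives both height and earnings;
    height itself has NO effect on earnings."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        male = rng.random() < 0.5
        height = 165 + (13 if male else 0) + rng.gauss(0, 7)  # cm
        earnings = 40000 + (15000 if male else 0) + rng.gauss(0, 5000)
        rows.append((male, height, earnings))
    return rows

rows = simulate()
# Ignoring gender: height looks like it "raises" earnings
pooled = ols_slope([h for _, h, _ in rows], [e for _, _, e in rows])
# Holding gender fixed: the apparent effect disappears
within = {
    group: ols_slope([h for m, h, _ in rows if m == group],
                     [e for m, _, e in rows if m == group])
    for group in (True, False)
}
print(f"pooled slope: {pooled:.0f} per cm")
print(f"within-gender slopes: {within[True]:.0f}, {within[False]:.0f}")
```

Comparing the slope within groups is the simplest way to "control for" a confounder. Adding gender as a second regressor would have a similar effect.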

Select the topic this weekend

Use the R Markdown (.Rmd) template

Lecture 4

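A higher R² means the model explains more of the variance in the outcome, i.e. it fits the data better

Maximizing the variables in the model: note that adding a variable never lowers R² on the same data, so a higher R² alone does not mean a better model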
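The following is a minimal Python sketch, assuming ordinary least squares with an intercept, of R² = 1 - SS_res / SS_tot. It shows that adding a pure-noise predictor cannot lower R² on the same data. It also computes adjusted R², a standard correction that the notes do not mention, which penalises extra predictors. All data are simulated.

```python
import random

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def r_squared(predictors, y):
    """R^2 of an OLS fit of y on the predictor columns (plus an intercept)."""
    rows = [[1.0] + [col[i] for col in predictors] for i in range(len(y))]
    p = len(rows[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(r[j] * r[k] for r in rows) for k in range(p)] for j in range(p)]
    xty = [sum(r[j] * yi for r, yi in zip(rows, y)) for j in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

def adjusted_r_squared(r2, n, k):
    """Penalise R^2 for k predictors (intercept not counted)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

rng = random.Random(0)
n = 50
x = [rng.uniform(0, 10) for _ in range(n)]
y = [2 * xi + rng.gauss(0, 3) for xi in x]
noise = [rng.gauss(0, 1) for _ in range(n)]  # unrelated to y

r2_one = r_squared([x], y)
r2_two = r_squared([x, noise], y)  # never lower than r2_one
print(r2_one, r2_two)
print(adjusted_r_squared(r2_one, n, 1), adjusted_r_squared(r2_two, n, 2))
```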

Do not rely only on technical criteria to fit the data; also choose variables based on theory, which makes the model more useful in practice

Observational data alone cannot support causal inference, because confounding factors may be present

Model error

- Random error: limits precision and shrinks as the sample size grows

- Systematic error: error in the research design (non-sampling error)

  - Measurement error: the difference between the observed and the actual value (sources: response, instrument, interviewer)

  - Sample design error (sources: selection, frame)
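The difference between the two kinds of error can be seen in a short Python sketch. All values are hypothetical: a true mean height of 170 cm and an instrument that always reads 2 cm too high. As the sample size grows, the random error shrinks toward zero. The systematic error stays at about +2 however large the sample gets.

```python
import random
import statistics

TRUE_MEAN = 170.0  # hypothetical true population mean height (cm)

def sample_mean(n, bias=0.0, seed=0):
    """Mean of n noisy measurements; `bias` models a systematic error,
    e.g. a miscalibrated instrument that always reads too high."""
    rng = random.Random(seed)
    return statistics.fmean(rng.gauss(TRUE_MEAN, 10) + bias for _ in range(n))

for n in (10, 100, 10_000):
    random_only = sample_mean(n) - TRUE_MEAN
    with_bias = sample_mean(n, bias=2.0) - TRUE_MEAN
    print(f"n={n:>6}: error (random only) {random_only:+.2f}, "
          f"error (with +2 bias) {with_bias:+.2f}")
```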

Explore the dataset with graphs

Examine the variables

Choose the model

Plot the correlations between all variables

Variable selection methods, e.g. stepwise selection or the lasso
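A correlation plot is built from the pairwise Pearson correlation matrix. Below is a minimal Python sketch of that matrix. The toy dataset and its column names are hypothetical. In R, the same matrix is usually obtained with `cor()` on a data frame and then plotted.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length columns."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def correlation_matrix(data):
    """Nested dict: matrix[a][b] is the correlation of columns a and b."""
    names = list(data)
    return {a: {b: pearson(data[a], data[b]) for b in names} for a in names}

# Hypothetical toy dataset (not from the course)
data = {
    "height": [160, 165, 170, 175, 180, 185],
    "weight": [55, 60, 66, 70, 78, 82],
    "shoe_size": [37, 38, 40, 41, 43, 44],
    "commute_min": [30, 12, 45, 20, 25, 15],
}
matrix = correlation_matrix(data)
for a, row in matrix.items():
    print(f"{a:>12}: " + "  ".join(f"{row[b]:+.2f}" for b in data))
```

Highly correlated predictor pairs, such as height and weight here, are the ones to watch for when selecting variables.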

Lecture 5

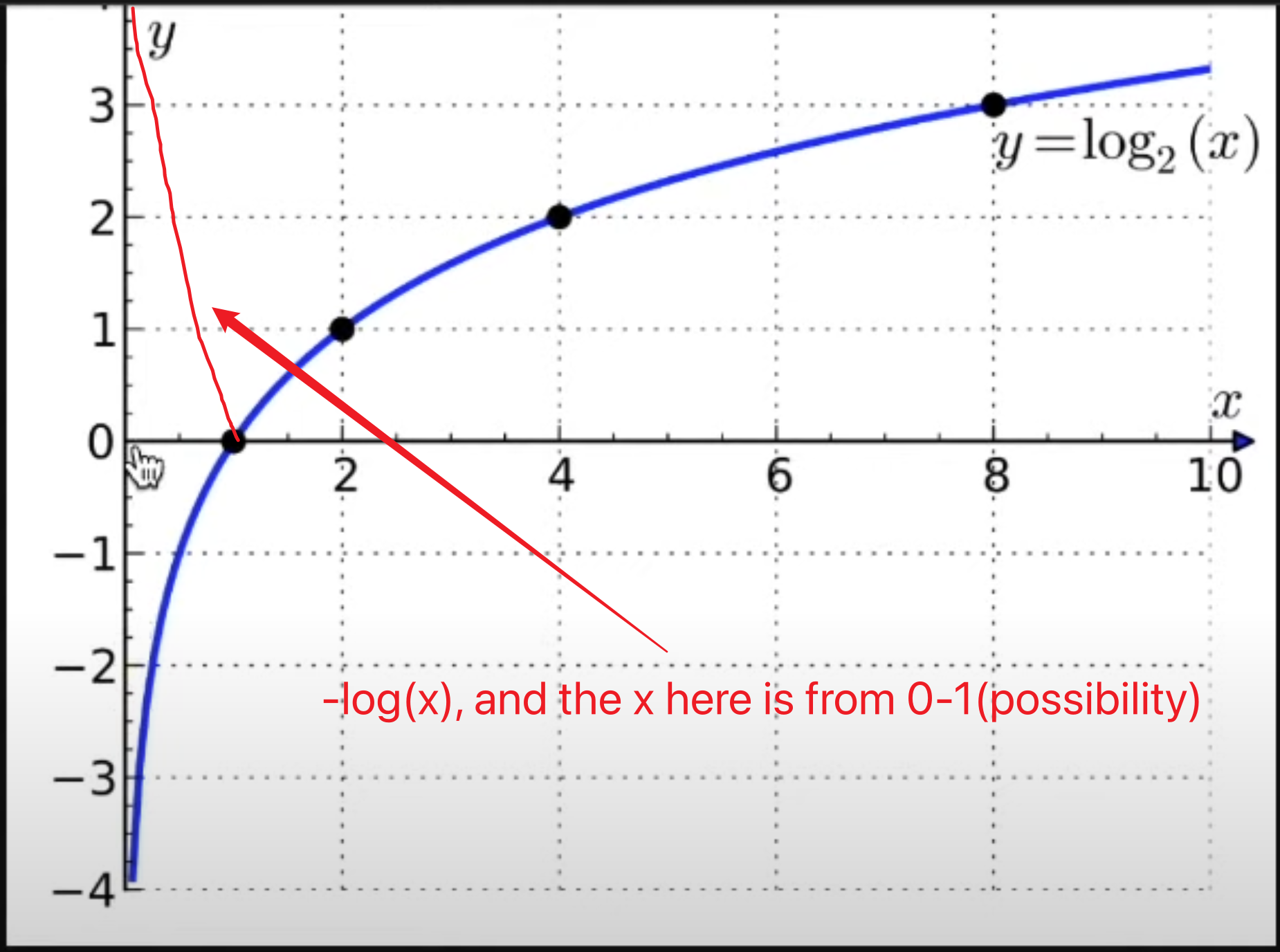

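A limitation of LR is that it assumes the relationship is linear

Logits: the log-odds, log(p / (1 - p)); logistic regression assumes the relationship is linear on this scale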
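Here is a minimal Python sketch of the logit and its inverse, the logistic function. The coefficients `b0` and `b1` are hypothetical. The linear predictor `b0 + b1 * x` lives on the log-odds scale, and the inverse logit maps it back to a probability between 0 and 1.

```python
import math

def logit(p):
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1 - p))

def inv_logit(z):
    """Logistic function: maps any real number back to a probability."""
    return 1 / (1 + math.exp(-z))

# In logistic regression the *logit* of the probability is linear in x:
#   logit(P(y = 1)) = b0 + b1 * x
b0, b1 = -3.0, 0.5  # hypothetical coefficients
for x in (0, 6, 12):
    print(x, round(inv_logit(b0 + b1 * x), 3))
```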

Ordinal logistic regression: for ordered categorical outcomes (e.g. agree, strongly agree, etc.)
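Ordinal logistic regression is commonly fitted as a proportional-odds model. Here is a minimal Python sketch of how such a model turns one linear predictor `eta` into a probability for each ordered category. It assumes the parameterisation logit P(Y <= k) = cutpoint_k - eta, which is the sign convention used by `MASS::polr` in R. The category labels and cutpoints are hypothetical.

```python
import math

def inv_logit(z):
    return 1 / (1 + math.exp(-z))

def category_probs(eta, cutpoints):
    """Proportional-odds model: P(Y <= k) = inv_logit(cutpoint_k - eta).
    `cutpoints` must be increasing; returns one probability per category."""
    cumulative = [inv_logit(c - eta) for c in cutpoints] + [1.0]
    probs, previous = [], 0.0
    for cum in cumulative:
        probs.append(cum - previous)  # P(Y = k) = P(Y <= k) - P(Y <= k-1)
        previous = cum
    return probs

labels = ["disagree", "neutral", "agree", "strongly agree"]
cutpoints = [-1.0, 0.5, 2.0]  # hypothetical thresholds between categories
for eta in (-1.0, 0.0, 2.0):  # eta = linear predictor, e.g. b * x
    probs = category_probs(eta, cutpoints)
    print(eta, dict(zip(labels, (round(p, 3) for p in probs))))
```

A larger `eta` shifts probability toward the higher categories, while the same slope applies at every threshold. That shared slope is the "proportional odds" assumption.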

For the lab 5 material, the last figure could be redrawn so that, for each year, the importance of each variable (independent variable) is plotted in a single panel.

Lecture 6

fuzzyjoin is an R package that performs joins similar to SQL joins, but matches rows on inexact keys (e.g. approximately equal strings)
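To show what a fuzzy join does, here is a Python sketch. It is not fuzzyjoin's API. Python's `difflib.SequenceMatcher` similarity ratio stands in for the string-distance measures that fuzzyjoin offers. The 0.85 threshold and the country names are hypothetical. Every pair of keys that is "similar enough" is kept, much like an inner join with a relaxed equality condition.

```python
from difflib import SequenceMatcher

def fuzzy_inner_join(left, right, threshold=0.85):
    """Pair every left key with every right key whose string similarity
    (difflib ratio, between 0 and 1) is at least `threshold`."""
    return [
        (a, b)
        for a in left
        for b in right
        if SequenceMatcher(None, a, b).ratio() >= threshold
    ]

countries = ["Australia", "New Zealand"]
survey_labels = ["Austrlia", "New Zeland", "Canada"]  # typos, as in messy data
print(fuzzy_inner_join(countries, survey_labels))
```

This compares every pair of keys, which is fine for small tables but slow for large ones.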

Research Plan Format

Format

Hide the R code chunks; the template already includes the code to hide them

Identify the key features

Explain why LR is used

Literature

Draw on theory from the literature

Is the hypothesis to be tested the one provided by the previous section?

The literature tells you, and lets you communicate, the hypotheses you propose

It informs what you need to do

It underpins what you want to explain

The literature section may reveal errors and may falsify the idea

Data

API missing

operation

Limitations

This section can be deleted

Lecture 7 - Quiz week

Data can come from:

consumer data

social media

A/B testing (to decide which of several versions performs better)

for instance, versions of a button with different colors and sizes may be shown to users, and the number of clicks on each version decides which version is better

census

individuals, including surveys

web scraping
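One common way to decide whether button version B really beats version A is a two-proportion z-test on the click-through rates. The notes do not name a specific test, so this is an assumption. Below is a minimal Python sketch of that test. The click counts are invented.

```python
import math

def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Two-sided z-test for a difference in click-through rates."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    pooled = (clicks_a + clicks_b) / (n_a + n_b)  # rate under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # = 2 * (1 - Phi(|z|))
    return z, p_value

# Hypothetical experiment: button version A vs version B, 1,000 users each
z, p = two_proportion_z_test(clicks_a=120, n_a=1000, clicks_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here a 12% vs 15% click rate gives a p-value just under 0.05, a borderline result. With smaller groups, the same difference would not be distinguishable from chance.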

Lecture 8

Surveys

Systematic error: not random

e.g. in a phone survey, younger people may be more likely to answer the phone, which causes nonresponse bias

A census differs from a survey because it does not draw a sample: it covers all residents of the country

Random error refers to sampling error
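The phone-survey nonresponse example can be simulated in a few lines of Python. The population shares, support rates and response rates are hypothetical. Even with tens of thousands of respondents the estimate stays off target: more responses reduce random error but do not remove a systematic one.

```python
import random

def survey(n=100_000, seed=42):
    """Hypothetical population: half young, half old. Young people support a
    policy more often AND answer the phone more often, so respondents
    over-represent the young."""
    rng = random.Random(seed)
    population, respondents = [], []
    for _ in range(n):
        young = rng.random() < 0.5
        supports = rng.random() < (0.7 if young else 0.4)
        population.append(supports)
        if rng.random() < (0.6 if young else 0.2):  # response probability
            respondents.append(supports)
    true_share = sum(population) / len(population)
    estimated_share = sum(respondents) / len(respondents)
    return true_share, estimated_share

true_share, estimated_share = survey()
print(f"true support: {true_share:.3f}, phone-survey estimate: {estimated_share:.3f}")
```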

Assignment-1

- Economic: Q288, 50 (income)


- Occupation: Q281, 282, 283

- Education: Q275

- https://d1wqtxts1xzle7.cloudfront.net/49101438/18.01.053.20160304-libre.pdf?1474797472=&response-content-disposition=inline%3B+filename%3DEffect_of_Education_on_Quality_of_Life_a.pdf&Expires=1713509378&Signature=bTvJ0cklHa83ixDEhUTW02gYB4KW0iex7Mx6etlJqBNha-f0l-gvirWcVjlpbtaXdn5SsFoSsWtjeay-18z5De6i3e2wRtZvtx5cuzyJe2RLJHKYPPXrkiEORhb9c35JK-WjFa7T8c8OIQj5RxD11Gj3W7wCsC3jJwVOewTDYwkVBKXC1-7BjpWcbOSrkZnazJwulzVzLIERo0l6iO51LIqFi6wY8TSiTTdFGhiHctf9bu2Y7IapgVAwDLKXbpYTdXd3c4nVMPqQryYQ5iOjKVEmcCdMQwn0HUGe837Dn38-7ttCIbNASUOgpjEGQEjmNlznMsOW9jG~X9VHjw__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA

- https://www.sciencedirect.com/science/article/pii/S2214804314001153?via%3Dihub


- Societal Wellbeing: Q47 (health)

- Security: Q131-138, 52

- Social capital, trust: Q57-61

- https://link.springer.com/article/10.1007/s00148-007-0146-7 (neighbourhood only)

Use PCA to combine multiple variables into a single feature
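Here is a minimal Python sketch of combining several variables into one feature with the first principal component. It uses power iteration on the correlation matrix, and it assumes the variables are standardised first so that no single scale dominates. The three "trust items" are simulated stand-ins, not the actual survey responses. In R this would typically be done with `prcomp(..., scale. = TRUE)`.

```python
import random
import statistics

def standardize(col):
    mean, sd = statistics.fmean(col), statistics.pstdev(col)
    return [(v - mean) / sd for v in col]

def first_principal_component(columns, iterations=500):
    """Return (loadings, scores, share of variance) for PC1 of the
    standardized columns, via power iteration on the correlation matrix."""
    z = [standardize(c) for c in columns]
    k, n = len(z), len(z[0])
    corr = [[sum(a * b for a, b in zip(z[i], z[j])) / n for j in range(k)]
            for i in range(k)]
    v = [1.0] * k
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    if v[0] < 0:  # an eigenvector's sign is arbitrary; fix it for readability
        v = [-x for x in v]
    eigenvalue = sum(v[i] * sum(corr[i][j] * v[j] for j in range(k)) for i in range(k))
    scores = [sum(v[i] * z[i][r] for i in range(k)) for r in range(n)]
    return v, scores, eigenvalue / k  # trace of a k x k correlation matrix is k

# Hypothetical: three correlated trust items combined into one index
rng = random.Random(7)
base = [rng.gauss(0, 1) for _ in range(200)]
items = [[b + rng.gauss(0, 0.3) for b in base] for _ in range(3)]
loadings, index, share = first_principal_component(items)
print([round(x, 2) for x in loadings], round(share, 2))
```

The `index` scores can then be used as a single predictor in place of the separate items.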

Lecture 10

kable (from the knitr package) in R

Lecture 11 - Causality

Lecture 12 - Journalism

datasplash platform

Final Project

Plots and tables can be included in the report

Use the kable function to present regression results as tables
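In an R Markdown report, `knitr::kable()` renders a data frame as a formatted table. To show what such a table looks like, here is a Python sketch that prints regression coefficients as a Markdown pipe table. The coefficient values are hypothetical. The term/estimate/std.error columns mimic a tidied model table. In the actual report, kable does this formatting for you.

```python
def pipe_table(headers, rows, digits=3):
    """Render rows as a Markdown pipe table, rounding floats to `digits`."""
    def fmt(value):
        return f"{value:.{digits}f}" if isinstance(value, float) else str(value)
    lines = ["| " + " | ".join(headers) + " |",
             "|" + "|".join("---" for _ in headers) + "|"]
    lines += ["| " + " | ".join(fmt(v) for v in row) + " |" for row in rows]
    return "\n".join(lines)

# Hypothetical regression output (term, estimate, standard error)
coefs = [("(Intercept)", 1.5, 0.25), ("height", 0.034, 0.012)]
print(pipe_table(["term", "estimate", "std.error"], coefs))
```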